Building a HOPCOMS Fruit/Veg Rate API Using CouchDB

I love HOPCOMS stores. There are quite a few stores across Bangalore. They are popular for fresh vegetables and fruits.

The Horticultural Producers’ Co-operative Marketing and Processing Society Ltd. or HOPCOMS was established with the principal objective of establishing a proper system for the marketing of fruits and vegetables; one that benefits both the farming community and the consumers. Prior to the establishment of HOPCOMS, no proper system existed in Karnataka for the marketing of horticultural produce. Farmers were in the clutches of the middlemen and the system benefited neither the farmers nor the consumers.

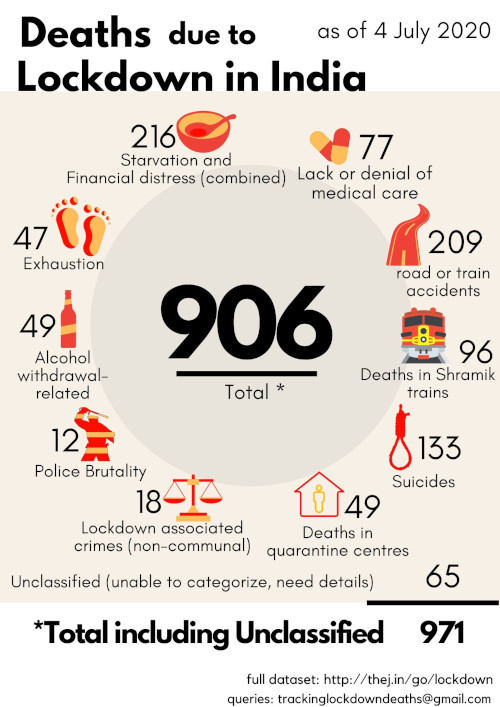

HOPCOMS publishes fruit and vegetable rates on its website every weekday. I used to visit the site once a week or so to check them. But I always wondered: if HOPCOMS had a proper API, what would I use it for? I could set up an alert on the onion rate based on historical data, say a 10% increase over the last month, or simply ask Alexa for the tomato rate.

Since HOPCOMS doesn't have an API, I built one. A simple script pulls the data from HOPCOMS once a day and stores it as a JSON document in CouchDB. Every API request is served from CouchDB, so there is no extra load on the HOPCOMS server. It has been running for months now, so I can write about it with some confidence. It's a simple API that returns the item codes and rates for a given date. There is also a metadata API that maps item codes to item names.

The code, with some historical data, is on GitHub. If you fill in your CouchDB details in daily.py, it should be able to pull the data. Ideally, set up a cron job to pull the data every day.

Since it's built on CouchDB, the API is web-accessible by default. For example, the data for Jan 16, 2018 is available at https://mycouchdb.ext/hopcoms_daily/20180116, where 20180116 is the date in yyyymmdd format and mycouchdb.ext is the host where your CouchDB is running. The data is just key-value pairs of item code and rate:

```json
{"_id":"20180116","_rev":"1-6177e5919a7f6a22dae47b44e4ff5a18","1":110.0,"4":198.0,"7":189.0,"9":240.0,"11":29.0,"12":62.0,"13":80.0,"14":54.0,"19":58.0,
"20":75.0,"21":86.0,"22":40.0,"27":100.0,"28":118.0,"29":142.0,"31":75.0,"32":425.0,"36":148.0,"37":32.0,"39":77.0,"40":76.0,"42":34.0,"43":48.0,
"44":100.0,"46":80.0,"48":60.0,"49":148.0,"51":53.0,"52":57.0,"54":96.0,"55":68.0,"56":65.0,"57":95.0,"58":70.0,"59":160.0,"63":120.0,"66":21.0,
"67":27.0,"69":39.0,"70":42.0,"78":20.0,"79":20.0,"81":80.0,"84":52.0,"87":88.0,"88":250.0,"101":60.0,"102":19.0,"108":42.0,"109":26.0,"110":34.0,
"112":24.0,"113":42.0,"114":40.0,"115":22.0,"116":16.0,"117":17.0,"121":12.0,"122":42.0,"123":37.0,"125":82.0,"126":50.0,"127":30.0,"131":60.0,
"132":45.0,"135":37.0,"136":36.0,"137":28.0,"139":14.0,"140":80.0,"142":26.0,"145":34.0,"147":148.0,"149":70.0,"150":80.0,"153":24.0,"155":54.0,
"156":29.0,"157":30.0,"158":60.0,"160":200.0,"162":188.0,"163":56.0,"167":140.0,"168":80.0,"171":13.0,"173":16.0,"174":23.0,"179":18.0,"180":80.0,
"181":26.0,"182":28.0,"183":18.0,"184":74.0,"185":56.0,"186":59.0,"187":46.0,"190":10.0,"191":40.0,"193":65.0,"196":50.0,"201":25.0,"202":66.0,
"203":38.0,"204":58.0,"205":40.0,"206":40.0,"208":34.0,"213":38.0,"215":56.0,"218":16.0,"220":4.6,"221":33.0,"226":32.0,"227":145.0,"250":45.0,"251":25.0}
```

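A minimal sketch of reading one day's rates over plain HTTP. It assumes the hypothetical host https://mycouchdb.ext from the URL example above and uses only Python 3's standard library; daily_url, parse_rates and fetch_rates are illustrative names, not part of the project. CouchDB answers 404 for a missing document, so fetch_rates returns None for dates with no rates.

```python
import datetime
import json
import urllib.error
import urllib.request

# Hypothetical host: replace with wherever your CouchDB is running
BASE_URL = "https://mycouchdb.ext"


def daily_url(day, base_url=BASE_URL):
    """URL of the hopcoms_daily document for a date (document id = yyyymmdd)."""
    # daily_url(datetime.date(2018, 1, 16)) -> "https://mycouchdb.ext/hopcoms_daily/20180116"
    return "{}/hopcoms_daily/{:%Y%m%d}".format(base_url, day)


def parse_rates(doc):
    """Drop CouchDB's _id/_rev fields, leaving {item_code: rate}."""
    return {code: rate for code, rate in doc.items() if not code.startswith("_")}


def fetch_rates(day, base_url=BASE_URL):
    """Fetch one day's rates, or None if no document exists for that date."""
    try:
        with urllib.request.urlopen(daily_url(day, base_url), timeout=10) as resp:
            return parse_rates(json.load(resp))
    except urllib.error.HTTPError as err:
        if err.code == 404:  # e.g. a day on which no rates were published
            return None
        raise
```

The item codes come back as strings (JSON object keys are always strings), so look them up as "1", not 1.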
You can get the item-code-to-name mapping from the hopcoms_meta database at https://mycouchdb.ext/hopcoms_meta/item_details. Again, the data is simple JSON:

```json
{
  "_id": "item_details",
  "_rev": "3-b3808e206cd2263b5ae67e7fd543f245",
  "1": {
    "name_en": "Apple Delicious",
    "name_kn": "ಸೇಬು ಡಲೀಷಿಯಸ್"
  },
  "2": {
    "name_en": "Apple Simla",
    "name_kn": "ಸೇಬು ಶಿಮ್ಲ"
  },
  ...
  ...
  ...
}
```
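Joining the two documents is a plain dictionary lookup. A sketch under the same assumptions (hypothetical host mycouchdb.ext, standard library only); load_item_details and named_rates are illustrative names. functools.lru_cache is one simple way to cache item_details in a long-running process, so it is fetched once rather than on every request.

```python
import functools
import json
import urllib.request

BASE_URL = "https://mycouchdb.ext"  # hypothetical host


@functools.lru_cache(maxsize=None)
def load_item_details(base_url=BASE_URL):
    """Fetch hopcoms_meta/item_details once per base URL and reuse the result."""
    url = base_url + "/hopcoms_meta/item_details"
    with urllib.request.urlopen(url, timeout=10) as resp:
        return json.load(resp)


def named_rates(rates, item_details, lang="name_en"):
    """Turn {item_code: rate} into {item_name: rate}; unknown codes are skipped."""
    named = {}
    for code, rate in rates.items():
        item = item_details.get(code)
        # _id/_rev map to strings, not item dicts, so they are skipped as well
        if isinstance(item, dict) and lang in item:
            named[item[lang]] = rate
    return named
```

Pass lang="name_kn" to get the Kannada names instead.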

So, using both, you can work out that on 20180116 the rate of Apple Delicious was INR 110. It's that easy. You could also cache item_details, and use other key-based CouchDB queries to get a month's or a year's worth of data, or the data between specific dates. I have written the initial API documentation; please send or add your comments. As of now, you have to self-host the API. The Python code below shows how simple it is to pull the data and update CouchDB.

Here is the code that powers the API:

```python
import csv
import datetime

import couchdb
import requests
from bs4 import BeautifulSoup

# Set to True once to seed the item-code mapping from item_list.csv
all_items_load = False

# Fill in your CouchDB URL (with credentials if needed)
db_full_url = ""

couch = couchdb.Server(db_full_url)
hopcoms_meta = couch["hopcoms_meta"]
hopcoms_daily = couch["hopcoms_daily"]


def normalise(label):
    """Strip spaces and lower-case a label so scraped names match the CSV."""
    return label.strip().replace(" ", "").lower()


# Map of normalised item name -> item code
all_item_list = {}
if all_items_load:
    with open("item_list.csv", newline="") as csv_file:
        reader = csv.reader(csv_file)
        next(reader)  # skip the header row
        for row in reader:
            # row[0] is the item code, row[1] the item name
            all_item_list[normalise(row[1])] = int(row[0])
    # Save the mapping to CouchDB only if it is not there yet
    if "item_codes" not in hopcoms_meta:
        print("add")
        all_item_list["_id"] = "item_codes"
        hopcoms_meta.save(all_item_list)
else:
    all_item_list = hopcoms_meta["item_codes"]

web_data_url = "http://www.hopcoms.kar.nic.in/(S(vks0rmawn5a2uf55i2gpl3zo))/RateList.aspx"
total_data = {}

r = requests.get(web_data_url, timeout=30)
if r.status_code == 200:
    soup = BeautifulSoup(r.text, "html.parser")

    # The page shows "Last Updated Date: dd/mm/yyyy"; turn it into yyyymmdd
    date_span_text = soup.find(id="ctl00_LC_DateText").get_text().strip()
    date_span_text = date_span_text.replace("Last Updated Date: ", "")
    day, month, year = date_span_text.split("/")
    final_date = year + month + day
    print("Updating for =" + final_date)

    table = soup.find(id="ctl00_LC_grid1")
    for tr in table.find_all("tr", recursive=False):
        if tr.find_all("th"):
            continue  # header row
        # Each row has six spans holding two items: spans 2-3 are the first
        # item's name and rate, spans 5-6 the second's; spans 1 and 4 are not used
        spans = [span.get_text().strip()
                 for td in tr.find_all("td")
                 for span in td.find_all("span")]
        for name_idx, rate_idx in ((1, 2), (4, 5)):
            # Skip missing cells and items without a name or a rate
            if rate_idx >= len(spans) or not spans[name_idx] or not spans[rate_idx]:
                continue
            item_id = all_item_list.get(normalise(spans[name_idx]))
            if item_id is None:
                print("Unknown item: " + spans[name_idx])
                continue
            total_data[str(item_id)] = float(spans[rate_idx])

    _id = "{:%Y%m%d}".format(datetime.date.today())
    print(_id)

    # Only store the data if HOPCOMS has already updated the rates for today
    if final_date == _id:
        print("MATCHES final_date and _id")
        total_data["_id"] = _id
        existing = hopcoms_daily.get(_id)
        if existing is not None:
            # Updating an existing document requires its current _rev
            print("exists " + existing["_rev"])
            total_data["_rev"] = existing["_rev"]
        else:
            print("add")
        hopcoms_daily.save(total_data)
    else:
        print("DOESN'T MATCH")
```
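Because each document id is a yyyymmdd date, the key-based queries mentioned above (a month's worth of data, or data between specific dates) map onto CouchDB's _all_docs endpoint with startkey, endkey and include_docs. The sketch below assumes the hypothetical host mycouchdb.ext again and uses only the standard library. It also adds an illustrative version of the onion alert from the start of the post: flag an item whose rate rose more than 10% over the fetched period. fetch_range, percent_change and should_alert are hypothetical helper names, not part of the project.

```python
import json
import urllib.parse
import urllib.request

BASE_URL = "https://mycouchdb.ext"  # hypothetical host


def fetch_range(start, end, base_url=BASE_URL):
    """Return [(yyyymmdd, {item_code: rate}), ...] for start..end inclusive.

    Document ids are yyyymmdd strings, so sorting by id sorts by date and a
    startkey/endkey range on _all_docs selects a date range.
    """
    query = urllib.parse.urlencode({
        "startkey": json.dumps(start),  # CouchDB expects JSON-encoded keys
        "endkey": json.dumps(end),
        "include_docs": "true",
    })
    url = "{}/hopcoms_daily/_all_docs?{}".format(base_url, query)
    with urllib.request.urlopen(url, timeout=30) as resp:
        rows = json.load(resp)["rows"]
    return [
        (row["id"], {k: v for k, v in row["doc"].items() if not k.startswith("_")})
        for row in rows
    ]


def percent_change(history, item_code):
    """Percent change in an item's rate from its first to its last listed day."""
    rates = [day_rates[item_code] for _, day_rates in history if item_code in day_rates]
    if len(rates) < 2 or rates[0] == 0:
        return None
    return (rates[-1] - rates[0]) * 100.0 / rates[0]


def should_alert(history, item_code, threshold=10.0):
    """True if the item's rate rose by more than `threshold` percent."""
    change = percent_change(history, item_code)
    return change is not None and change > threshold
```

For the onion alert, you could pass the result of fetch_range("20180101", "20180131") to should_alert together with the onion's item code, looked up in item_details.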

I can also give a few of you access to my own API server. Since it runs on a small server, I can only take on very few people, so no promises: send me an email and I will send you the details. In the meantime, tell me: how would you use the API?
