Building an Alexa Skill using OpenWhisk and CouchDB

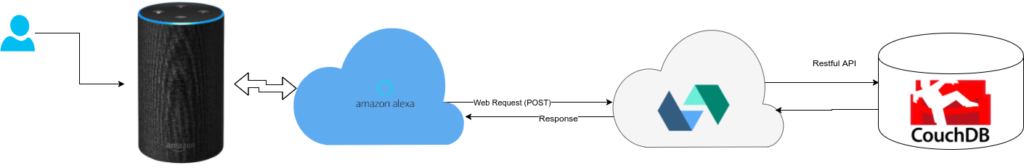

Smart speakers are becoming popular these days. I see Alexa or Google Home devices everywhere. Voice is an interesting technology, and I think it is going to become very popular among both younger and elderly people, which is what excites me about it. In this tutorial we will create a simple Alexa Skill using OpenWhisk as our serverless platform and CouchDB as our database.

The flow is simple. We will create an Alexa Skill that connects to our serverless web service on OpenWhisk. This OpenWhisk action speaks Alexa's language: it accepts Alexa's requests and replies in the JSON format Alexa expects. The action calls our CouchDB database as and when required, whether to retrieve the information the user requested or to store user session values. In this case we will use it to retrieve onion rates from our CouchDB-based HOPCOMS API.

Follow our previous tutorial to set up your own CouchDB HOPCOMS API. You can install CouchDB yourself or use a hosted provider like IBM.

I have already written a tutorial on how to get started with OpenWhisk on IBM, which you can use to get going. Here we will create a project called hopcoms_rate to implement the web service.

I am going to repeat a couple of steps to make this tutorial easier to follow. You can find the details in the previous post mentioned above.

Clone the project https://github.com/thejeshgn/openwhisk-fabfile-deploy and change `action_name` in the fabfile. Next, we will edit `__main__.py` to get the data from the HOPCOMS API and return the response in the format Alexa expects.

In this case we are only responding to the call “HOPCOMS” with the rate of onions. In the future, one could extend it with a fruit or vegetable intent, so that asking “HOPCOMS APPLE” makes Alexa speak out the price of apples. That will require processing the parameters Alexa sends, which we don't do yet.
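Alexa sends the intent name and any slot values inside the JSON request body. As a sketch of what that parameter processing could look like, assuming the web action receives Alexa's body as top-level parameters (the usual behaviour for OpenWhisk web actions) and a hypothetical custom slot named `item`, a future version might extract the spoken item like this. The helper `get_requested_item` is illustrative and not part of the original project.

```python
def get_requested_item(params, slot_name="item"):
    """Return the spoken slot value from an Alexa IntentRequest, or None.

    Assumes a hypothetical custom slot called "item"; LaunchRequests and
    intents without a filled slot return None.
    """
    request = params.get("request", {})
    if request.get("type") != "IntentRequest":
        return None
    # "slots" may be missing entirely when the intent defines none
    slots = request.get("intent", {}).get("slots") or {}
    value = slots.get(slot_name, {}).get("value")
    # Normalise the spoken value so "Apple" and "apple" match the same item
    return value.strip().lower() if value else None


if __name__ == "__main__":
    sample = {
        "version": "1.0",
        "request": {
            "type": "IntentRequest",
            "intent": {
                "name": "hopcoms",
                "slots": {"item": {"name": "item", "value": "Apple"}},
            },
        },
    }
    print(get_requested_item(sample))  # apple
    print(get_requested_item({"request": {"type": "LaunchRequest"}}))  # None
```

The returned name would then have to be mapped to a HOPCOMS item code, the way the code below maps "163" to Onion.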

```python
import datetime

import requests


def main(params):
    # Today's date as YYYYMMDD, used to build the CouchDB document URL
    now = datetime.datetime.now()
    key = now.strftime("%Y%m%d")

    # couch_url is set as a parameter on the action, e.g.
    # https://username:password@couch_url/hopcoms_daily/
    couch_url = params.get("couch_url") + key + key
    r = requests.get(couch_url, timeout=10)

    onion_rate = None
    onion_type = None
    if r.status_code == 200:
        data = r.json()
        # HOPCOMS item codes: 163 is Onion, 165 is Small Economy Onion
        if "163" in data:
            onion_rate = data["163"]
            onion_type = "Onion"
        elif "165" in data:
            onion_rate = data["165"]
            onion_type = "Small Economy Onion"

    # Build the plain-text speech Alexa should say
    output_speech = {"type": "PlainText"}
    if onion_rate:
        output_speech["text"] = "Today's rate of " + onion_type + " is rupees " + str(onion_rate)
    else:
        output_speech["text"] = "We have not received the onion rate today. Please check later."

    # Wrap it in the response envelope Alexa expects
    return {
        "version": "1.0",
        "response": {"outputSpeech": output_speech, "shouldEndSession": True},
    }
```

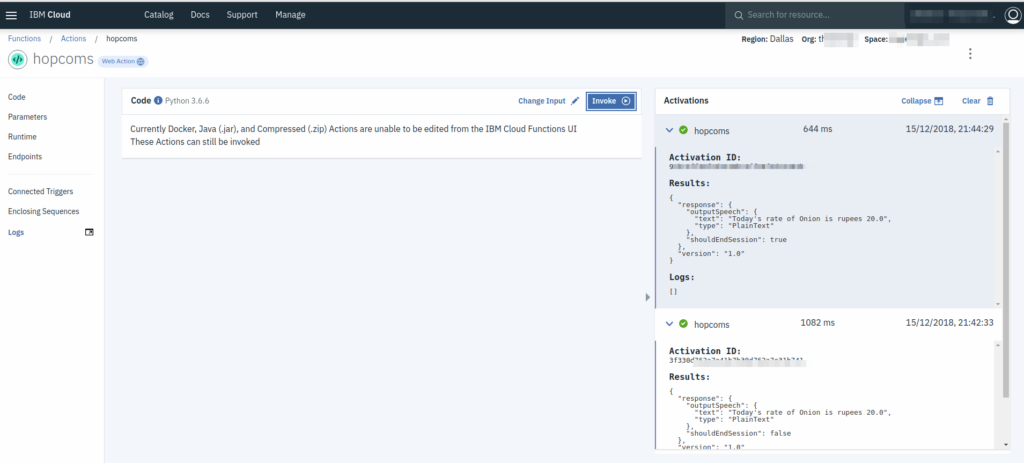
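`main` builds the Alexa response envelope inline. If you plan to add more intents, it can help to factor that into a small helper. `build_alexa_response` here is a hypothetical name, not part of the original project; it produces the same `version` / `response` / `outputSpeech` / `shouldEndSession` structure that `main` returns. The rate in the example call is a made-up value.

```python
import json


def build_alexa_response(text, end_session=True):
    """Wrap plain text in a minimal Alexa custom-skill response envelope."""
    return {
        "version": "1.0",
        "response": {
            "outputSpeech": {"type": "PlainText", "text": text},
            # True tells Alexa to close the session after speaking
            "shouldEndSession": end_session,
        },
    }


if __name__ == "__main__":
    # Illustrative text only; the real rate comes from CouchDB
    body = build_alexa_response("Today's rate of Onion is rupees 30")
    print(json.dumps(body, indent=2))
```

Printing the result with `json.dumps` shows the JSON body the web action should hand back to Alexa.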

Here the response is in JSON format. See Alexa's response format documentation for how it should be structured; it's fairly straightforward. The code above is also straightforward if you know the HOPCOMS API. Once you are happy with the code, create the action by running:

```shell
# set up the virtual env
fab setup

# package the code
fab package

# create the action
fab create
```

Whenever you update the code, you will have to package it again and update the action:

```shell
# package the code
fab package

# update the action
fab update
```

Now log in to your IBM OpenWhisk console and invoke the action. You should get the response.
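Besides the console, you can invoke the action over OpenWhisk's REST API with a blocking call that returns only the result. A minimal standard-library sketch, assuming a namespace that authenticates with a classic `uuid:key` API key over Basic auth (namespaces using IAM authentication may need a bearer token instead). `API_HOST` and `UUID:KEY` are placeholders, and both function names are hypothetical.

```python
import base64
import json
import urllib.parse
import urllib.request


def build_invoke_request(api_host, auth_key, action_name, params=None, namespace="_"):
    """Build a blocking OpenWhisk invoke request that returns only the result.

    auth_key is the namespace's API key in "uuid:key" form; "_" means the
    default namespace of that key.
    """
    path = "/api/v1/namespaces/{}/actions/{}".format(
        urllib.parse.quote(namespace, safe=""),
        urllib.parse.quote(action_name, safe=""),
    )
    url = "https://" + api_host + path + "?blocking=true&result=true"
    token = base64.b64encode(auth_key.encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=json.dumps(params or {}).encode("utf-8"),
        headers={"Authorization": "Basic " + token, "Content-Type": "application/json"},
        method="POST",
    )


def invoke(api_host, auth_key, action_name, params=None):
    """Send the request and decode the action's JSON result."""
    request = build_invoke_request(api_host, auth_key, action_name, params)
    with urllib.request.urlopen(request, timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Placeholders: replace with your own API host and key before running
    print(invoke("API_HOST", "UUID:KEY", "hopcoms_rate"))
```

No parameters are passed here because couch_url is stored on the action itself; the result should be the same Alexa-style JSON you see in the console.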

Please remember to set the couch_url parameter in the OpenWhisk dashboard. It will be of the format https://username:password@couch_url/hopcoms_daily/ and should keep the trailing slash, since the code appends the date key directly to this URL.
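Because `main` builds the document URL by plain string concatenation, a malformed couch_url fails only at request time. A small sketch for sanity-checking the value before pasting it into the dashboard; `check_couch_url` is a hypothetical helper and only checks the shape given in the format above, not whether the credentials work.

```python
from urllib.parse import urlsplit


def check_couch_url(couch_url):
    """Return a list of problems with a couch_url value (empty if it looks fine).

    Expected shape: https://username:password@couch_url/hopcoms_daily/
    """
    problems = []
    parts = urlsplit(couch_url)
    if parts.scheme != "https":
        problems.append("scheme should be https")
    if not parts.username or not parts.password:
        problems.append("username and password are missing")
    # main() appends the date key directly, so the trailing slash matters
    if not parts.path.endswith("/hopcoms_daily/"):
        problems.append("path should end with /hopcoms_daily/ (note the trailing slash)")
    return problems


if __name__ == "__main__":
    print(check_couch_url("https://user:secret@example.com/hopcoms_daily/"))  # []
    print(check_couch_url("http://example.com/hopcoms_daily"))
```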

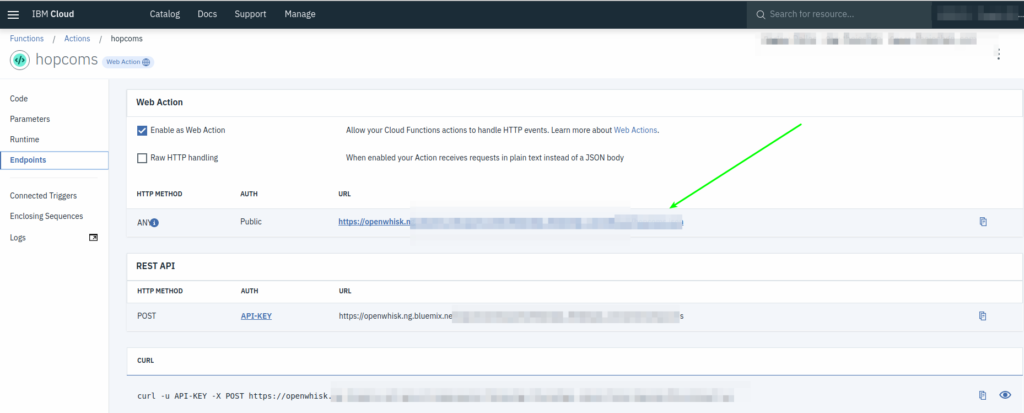

Once everything is working, let’s make this a web action and get its URL endpoint. Since this is not critical, I have made it a public web action endpoint. If you prefer, you can enable an API-key-based endpoint instead; it depends on how secure you want your endpoint to be.
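The console shows the web action URL directly, but it helps to know how it is composed: `https://<api-host>/api/v1/web/<namespace>/<package>/<action>.json`, where `default` is used for actions that are not in a package and the `.json` extension returns the action's result as the JSON body. The sketch below builds that URL and posts a minimal, hypothetical Alexa `LaunchRequest` to it; the host and namespace are placeholders and the function names are illustrative. Since our action ignores the request type, any request should get the onion-rate reply.

```python
import json
import urllib.parse
import urllib.request


def web_action_url(api_host, namespace, action_name, package="default"):
    """URL of an OpenWhisk web action; .json returns the result as JSON."""
    return "https://{}/api/v1/web/{}/{}/{}.json".format(
        api_host,
        urllib.parse.quote(namespace, safe=""),
        urllib.parse.quote(package, safe=""),
        urllib.parse.quote(action_name, safe=""),
    )


def post_fake_launch_request(url):
    """POST a minimal, hypothetical Alexa LaunchRequest body and return the reply."""
    body = {"version": "1.0", "request": {"type": "LaunchRequest"}}
    req = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.loads(resp.read().decode("utf-8"))


if __name__ == "__main__":
    # Placeholders: use the host and namespace shown in your console
    url = web_action_url("API_HOST", "NAMESPACE", "hopcoms_rate")
    print(post_fake_launch_request(url))
```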

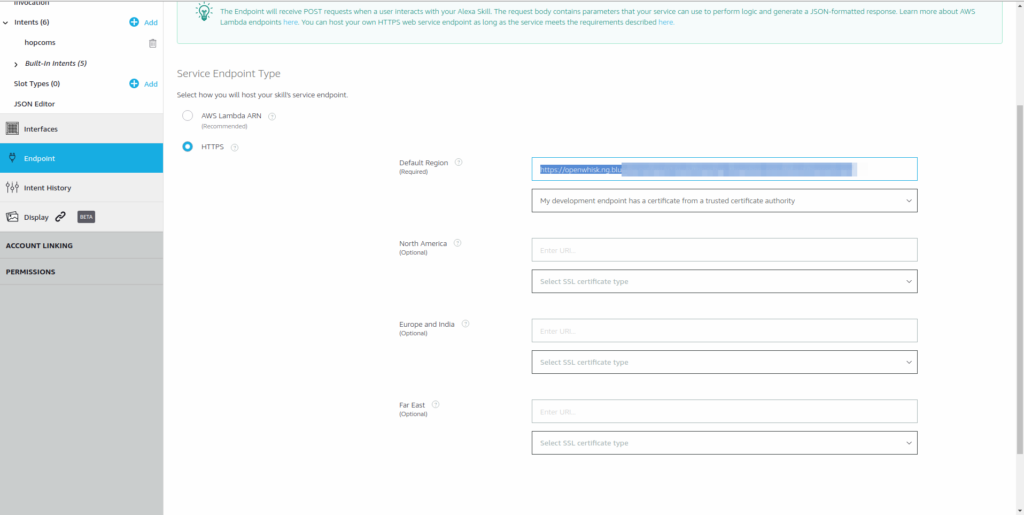

Next, we will create a skill on Amazon Alexa. Choose a Custom skill, set the skill invocation name to HOPCOMS, the intent to hopcoms and the sample utterance to hopcoms. Then set the skill's endpoint to your OpenWhisk JSON web action URL.
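If you prefer the console's JSON Editor to clicking through the forms, the same settings can be expressed as an interaction model. A sketch that generates one, assuming the editor accepts this `interactionModel` / `languageModel` shape; note that the JSON form takes the invocation name in lowercase. `interaction_model` is a hypothetical helper, and the standard Cancel/Help/Stop intents are included because custom skills normally carry them.

```python
import json


def interaction_model(invocation_name="hopcoms"):
    """Minimal interaction model mirroring the settings described above."""
    return {
        "interactionModel": {
            "languageModel": {
                # Invocation names are written in lowercase in the model JSON
                "invocationName": invocation_name,
                "intents": [
                    # The single custom intent, triggered by saying "hopcoms"
                    {"name": "hopcoms", "slots": [], "samples": ["hopcoms"]},
                    # Standard built-in intents for custom skills
                    {"name": "AMAZON.CancelIntent", "samples": []},
                    {"name": "AMAZON.HelpIntent", "samples": []},
                    {"name": "AMAZON.StopIntent", "samples": []},
                ],
                "types": [],
            }
        }
    }


if __name__ == "__main__":
    # Paste the printed JSON into the JSON Editor, then build the model
    print(json.dumps(interaction_model(), indent=2))
```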

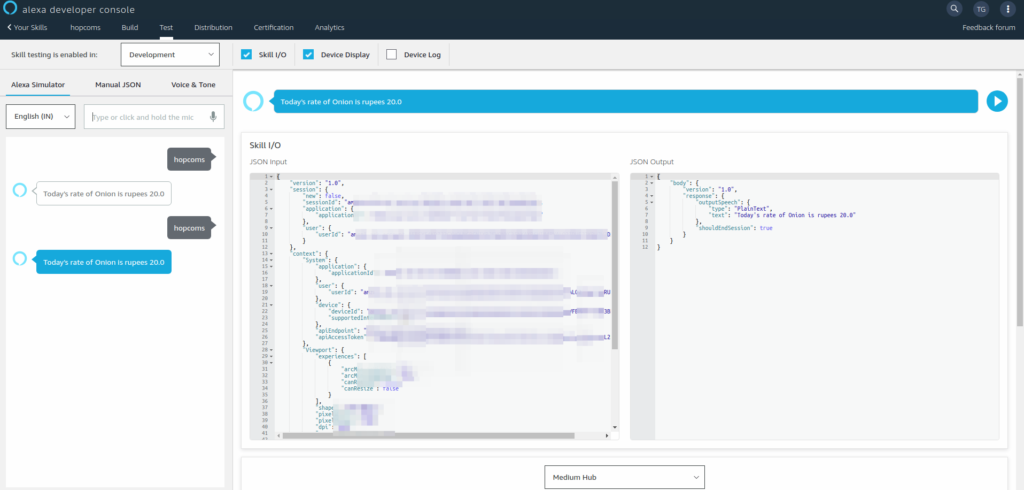

Once this is done, build the model and test it in development mode. You should be able to test it in the browser and on any device logged in to your account.

There you have it: a simple Alexa Skill running with its backend on IBM OpenWhisk and CouchDB. Since you control the backend completely, you can keep improving it.

I am working on a full version of the HOPCOMS Alexa API, where you can ask for the rate of a specific vegetable or fruit. I will keep blogging about the steps; this is just step one.