ChatGPT Hallucinates

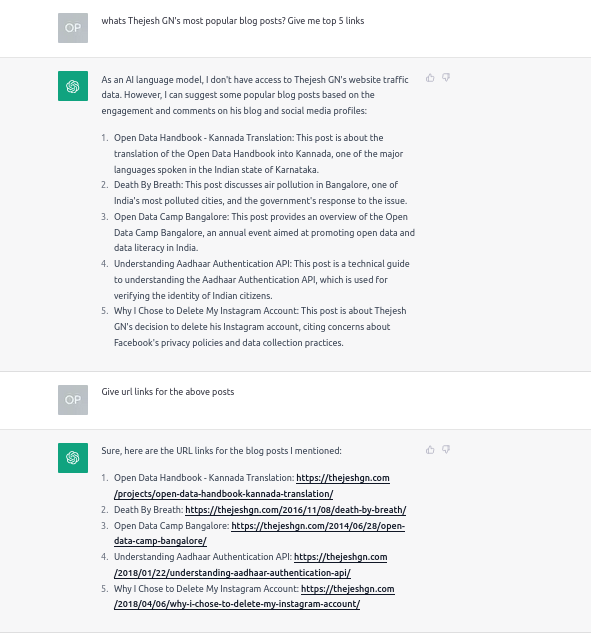

Like the rest of the tech world, I have been experimenting with ChatGPT. Since this is my twentieth year of blogging, I asked ChatGPT to pick out the most popular posts on my blog. My blog has been public since the beginning, and at least parts of it would have been available to ChatGPT when it was built. So I expected it to pick actual posts and present them to me. But strange things happened.

It gave me an answer. At first glance, the links look good; they follow the standard URL format of WordPress, the blog software I use. The subjects and titles look familiar, like something I would have written.

But no, they don’t exist. They are made up. You can check my archives here. None of them exist.

So I asked it to give me real ones. It gave me a different set. These also look genuine, but again, they don’t exist.

So the third time, I asked a more specific question: give me the most linked blog posts. Yet again, ChatGPT made up the posts and links. They look very real, but they are not.

So my guess is that ChatGPT hallucinates and presents you with data that looks very real but isn’t. That makes it an ideal tool for making things up, whether that is fake news or fiction. We will have to see how the world uses it.
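Checking each suggested link against the archives by hand gets tedious, so here is a minimal sketch of how that check could be automated. It assumes you already have a list of your real post URLs (for example, exported from the archives page); the function names and the `example.com` URLs are hypothetical and only there for illustration. It does not touch the network; it only compares the suggested links with the known ones.

```python
from urllib.parse import urlsplit


def normalize(url):
    """Reduce a URL to host + path so that http/https, a leading
    "www." and a trailing slash do not cause false mismatches."""
    parts = urlsplit(url.strip())
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    return host + parts.path.rstrip("/")


def find_fabricated_links(suggested, archive_urls):
    """Return the suggested URLs that do not appear in the archive list."""
    known = {normalize(u) for u in archive_urls}
    return [u for u in suggested if normalize(u) not in known]


# Hypothetical data: one real post, and two links an assistant suggested.
archive = ["https://example.com/2004/05/first-post/"]
suggested = [
    "http://www.example.com/2004/05/first-post",
    "https://example.com/2015/01/made-up-post/",
]

for link in find_fabricated_links(suggested, archive):
    print("Not in the archives:", link)
```

Anything this prints is a link that looks plausible but has no matching post, which is exactly what happened with every link in the answers above.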

Note: There was a typo in the title. Instead of ChatGPT, it said ChapGPT 🤦. I have corrected the title, but the URL remains the same because Cool URIs don’t change.

Did you try prompting it? ChatGPT can produce such results, but when it is asked to return nothing if it is unsure, it seems to work.