Weekly Notes 09/2025

My neck is doing okay. Resting, i.e., not looking at the phone but sleeping, has greatly helped. I’m still wearing the cervical collar, as the doctor advised. I’ve also slowed down on the work front this week because of it. Hopefully, I will be able to catch up next week.

- We went out for lunch with friends at Kuuraku, Bangalore. I liked the starters, though I thought they were expensive.

- I downloaded all my Kindle books. I stopped buying books on Kindle a long time ago, and now I am pretty much out of that ecosystem. I am still looking for a decent portable e-ink device, ideally a 6-inch one that runs Android so that I can configure it however I want. Something like the Boox Palma, or maybe I will fix my old Kindle Keyboard and jailbreak it.

- I got tagged by Sathya and, as a response, wrote – A challenge of blog questions.

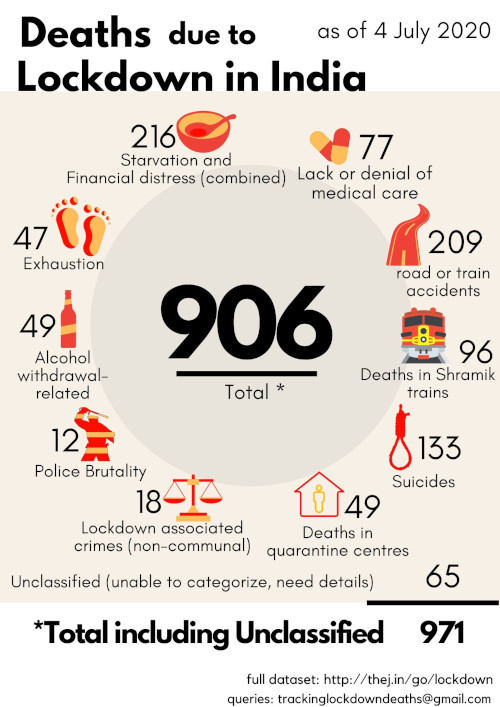

- It’s good to see people discussing air quality and AQI because of Bryan Johnson. I don’t know why people have taken so long to understand air pollution is killing us. There have been some folks who have been raising concerns for more than a decade now. It’s going to hurt the middle and lower middle class like any environmental disaster.

- A cartoon can get the website of Vikatan, a century-old news magazine, blocked by the government without notice. We have a long way to go as a democracy.

- Appa is here; he has a bit of a fever due to travel.

- I updated the bengaluru_airport_estimated_wait_time scraper to get the correct data. They had updated their backend to include origin validation. I had not looked at the status of the scraper for a long time. It failed sometime in 2023, so we lost the complete 2024 data. Anyway, it’s up, and data is being collected now. The data format has also changed now that we have T2, and T1 is all domestic. The readme and load.sh need an update to handle the new data format. I will do it once I have collected some data. Reminder: Set up status alerts for all my public scraping jobs.

- I have been using qwen2.5:0.5b, the smallest qwen2.5 model, locally with Ollama and Open WebUI. It runs pretty well, even on my CPU-only machine. Currently, I am using it to summarize documents, ask questions about them, etc. I am very impressed. I want to try qwen2.5-coder:0.5b next to see what it can do. I am fascinated by small (i.e., less than 1 GB) large language models 😄 I want to push them to their limits and see everything they can do.
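For anyone who wants to script the document summaries instead of going through Open WebUI, here is a minimal sketch. It assumes Ollama is running locally on its default port (11434) and that the model has already been pulled; the prompt wording and the function names are made up for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_summary_request(text, model="qwen2.5:0.5b"):
    """Build (but do not send) a non-streaming generate request."""
    payload = {
        "model": model,
        # Illustrative prompt; tune it for your own documents.
        "prompt": "Summarize the following document in three sentences:\n\n" + text,
        "stream": False,  # ask for one JSON object instead of a stream
    }
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def summarize(text, model="qwen2.5:0.5b"):
    """Send the request and return the model's reply text."""
    with urllib.request.urlopen(build_summary_request(text, model)) as resp:
        return json.loads(resp.read())["response"]
```

Calling `summarize(open("notes.txt").read())` would return the summary as a string, where `notes.txt` is a placeholder file name. Swapping the `model` argument for `qwen2.5-coder:0.5b` should be enough to try the coder variant.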
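On the reminder about status alerts for the scraping jobs: one simple approach is a freshness check that flags any job whose newest data point is too old. This is only a sketch. The two-day threshold and the function names are assumptions, and the alert is just a print statement. How the last-updated timestamps are obtained (file modification time, last commit, last row in the data) is left open.

```python
from datetime import datetime, timedelta, timezone


def is_stale(last_updated, now, max_age=timedelta(days=2)):
    """Return True when the newest data point is older than max_age."""
    return now - last_updated > max_age


def stale_jobs(last_updates, now, max_age=timedelta(days=2)):
    """Return the sorted names of jobs whose latest data is too old."""
    return sorted(
        name for name, ts in last_updates.items() if is_stale(ts, now, max_age)
    )


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    # Hypothetical timestamp; in practice, read it from each job's latest output.
    last_updates = {
        "bengaluru_airport_estimated_wait_time": now - timedelta(hours=3),
    }
    for name in stale_jobs(last_updates, now):
        print(f"ALERT: {name} has not produced data recently")
```

Run from a daily cron job, a check like this would have caught the 2023 failure within days instead of more than a year later.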

Hi Thej, great stuff.

Air pollution is a huge problem, and it’s been described as “the new smoking” in terms of how our awareness of it will grow. We get many months of poor air quality in Chiang Mai, and I’ve gone the DIY route of buying $5 HEPA filters (~30x30cm) and strapping these to normal fans. They work well (I have a Kaiterra Laser Egg particle counter) and are the cheapest option. Yes, a bit ugly, but one tends to tune that out.

For an ebook reader, keep in mind that reading usability requires a certain width. The problem with phone (portrait) dimensions is that the width is very narrow. My 6.5″ phone has a 2.75″ width, while my 6″ Kindle Paperwhite has a 3.5″ width. The 6.8″ Paperwhite (which my children have) has a width just over 4″, which makes it easier to read, though the two Kindles weigh 158 grams and 204 grams respectively (without covers). The lighter e-reader causes less strain, though the larger screens are much better for things like manga.

Hope this helps,

All the best,

Jeff

Thank you, Jeff.

Yes, now I am looking for a reader with a bigger screen. The Boox 6-inch e-reader seems to meet the criteria. It also has Android. I don’t need phone features, but I need an accessible OS where I can install a couple of apps, such as an ebook reader and a feed reader.

I have been using the air purifiers from IKEA. They are under $100; I can hang them on the wall.

EPA* filters are about $7. I order a bunch of them together to save on shipping costs. I usually buy only EPA filters; if required, we can add an activated carbon filter.

* EPA12 filters have an efficiency of 99.5% for particles ≥0.3 microns. HEPA filters (H13 and H14) have efficiencies of 99.95% (H13) or 99.995% (H14) for the same particle size.
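To put those percentages side by side, here is a small arithmetic sketch of how many particles get through in a single pass, using only the efficiencies quoted in the footnote. It ignores airflow and room size, which also matter for how quickly a purifier cleans a room.

```python
# Efficiencies for particles >= 0.3 microns, as quoted in the footnote above.
EFFICIENCY = {"EPA12": 0.995, "H13": 0.9995, "H14": 0.99995}


def particles_passing(efficiency, incoming=100_000):
    """Particles that get through a single pass, out of `incoming`."""
    return round(incoming * (1 - efficiency))


for name, eff in EFFICIENCY.items():
    print(f"{name}: {particles_passing(eff)} of 100,000 particles pass through")
# EPA12: 500 of 100,000 particles pass through
# H13: 50 of 100,000 particles pass through
# H14: 5 of 100,000 particles pass through
```

So an EPA12 filter lets through about ten times as many particles per pass as an H13, which is the trade-off behind the lower price.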